// AI Model Interface
// Solidity has no floating-point type, so accuracy is expressed as an
// unsigned integer in basis points (8500 = 85.00%).
pragma solidity ^0.8.20;

interface IAIModel {
    function predict(string calldata data) external returns (string memory);

    function train(string calldata trainingData) external;

    function getAccuracy() external view returns (uint256);
}

/// @title AI Model Deployment and Management Contract
/// @dev Enables deployment, training, and management of AI models
contract AIModelManager {
    event ModelDeployed(address indexed modelAddress, string modelName);
    event ModelTrained(address indexed modelAddress, uint256 accuracy);
    event ModelPrediction(address indexed modelAddress, string input, string output);

    /// @notice Deploys a new AI model and runs its initial training
    /// @param modelName Name of the AI model
    /// @param initialTrainingData Initial training data for the model
    /// @return The address of the deployed AI model contract
    function deployModel(string memory modelName, string memory initialTrainingData)
        external
        returns (address)
    {
        AIModel newModel = new AIModel(modelName);
        newModel.train(initialTrainingData);
        emit ModelDeployed(address(newModel), modelName);
        // Report the accuracy recorded by the initial training run
        emit ModelTrained(address(newModel), newModel.getAccuracy());
        return address(newModel);
    }

    /// @notice Makes a prediction using an AI model
    /// @param modelAddress Address of the AI model contract
    /// @param input Input data for prediction
    /// @return The prediction result from the AI model
    function makePrediction(address modelAddress, string memory input)
        external
        returns (string memory)
    {
        IAIModel model = IAIModel(modelAddress);
        string memory result = model.predict(input);
        emit ModelPrediction(modelAddress, input, result);
        return result;
    }
}

/// @title AI Model Implementation
/// @dev Basic implementation of an AI model with prediction capabilities
contract AIModel is IAIModel {
    string public modelName;
    uint256 public accuracy; // basis points: 8500 = 85.00%
    mapping(string => string) private predictions;

    constructor(string memory _modelName) {
        modelName = _modelName;
    }

    function predict(string calldata data) external view override returns (string memory) {
        // In a real implementation, this would use ML algorithms
        // Simplified for demonstration: return a stored prediction if one exists.
        // Solidity cannot compare strings with !=, so check the byte length instead.
        if (bytes(predictions[data]).length != 0) {
            return predictions[data];
        }
        return "Prediction result";
    }

    function train(string calldata /* trainingData */) external override {
        // Training logic would be implemented here
        accuracy = 8500; // Example accuracy: 85.00%
    }

    function getAccuracy() external view override returns (uint256) {
        return accuracy;
    }
}
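For readers more at home in Python than Solidity, the control flow of `AIModelManager` and `AIModel` can be mirrored off-chain. This is a hypothetical simulation for reasoning about call order and the cache-or-default behavior of `predict`; it is not an Ethereum client, and the class names, the integer stand-ins for contract addresses, and the `events` log are illustrative assumptions.

```python
from dataclasses import dataclass, field
from itertools import count
from typing import Dict, List, Tuple


@dataclass
class AIModelSim:
    """Hypothetical off-chain stand-in for the AIModel contract."""
    model_name: str
    predictions: Dict[str, str] = field(default_factory=dict)

    def train(self, training_data: str) -> None:
        # Placeholder, like the contract: no real training happens here
        pass

    def predict(self, data: str) -> str:
        # Return a stored, non-empty prediction if one exists, else the fixed default
        return self.predictions.get(data) or "Prediction result"


class AIModelManagerSim:
    """Mirrors deployModel/makePrediction and records emitted events as tuples."""

    def __init__(self) -> None:
        self._next_address = count(1)  # fake addresses: 1, 2, 3, ...
        self.models: Dict[int, AIModelSim] = {}
        self.events: List[Tuple] = []

    def deploy_model(self, model_name: str, initial_training_data: str) -> int:
        address = next(self._next_address)
        model = AIModelSim(model_name)
        model.train(initial_training_data)
        self.models[address] = model
        self.events.append(("ModelDeployed", address, model_name))
        return address

    def make_prediction(self, address: int, data: str) -> str:
        result = self.models[address].predict(data)
        self.events.append(("ModelPrediction", address, data, result))
        return result
```

Deploying a model and calling `make_prediction` returns `"Prediction result"` until an entry for that input is stored in the model's `predictions`, matching the contract's fallback branch.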

Cloud KVM GPUs

Power your AI, machine learning, and graphics workloads with our enterprise-grade Cloud KVM GPU instances.

Enterprise-Grade Virtualization

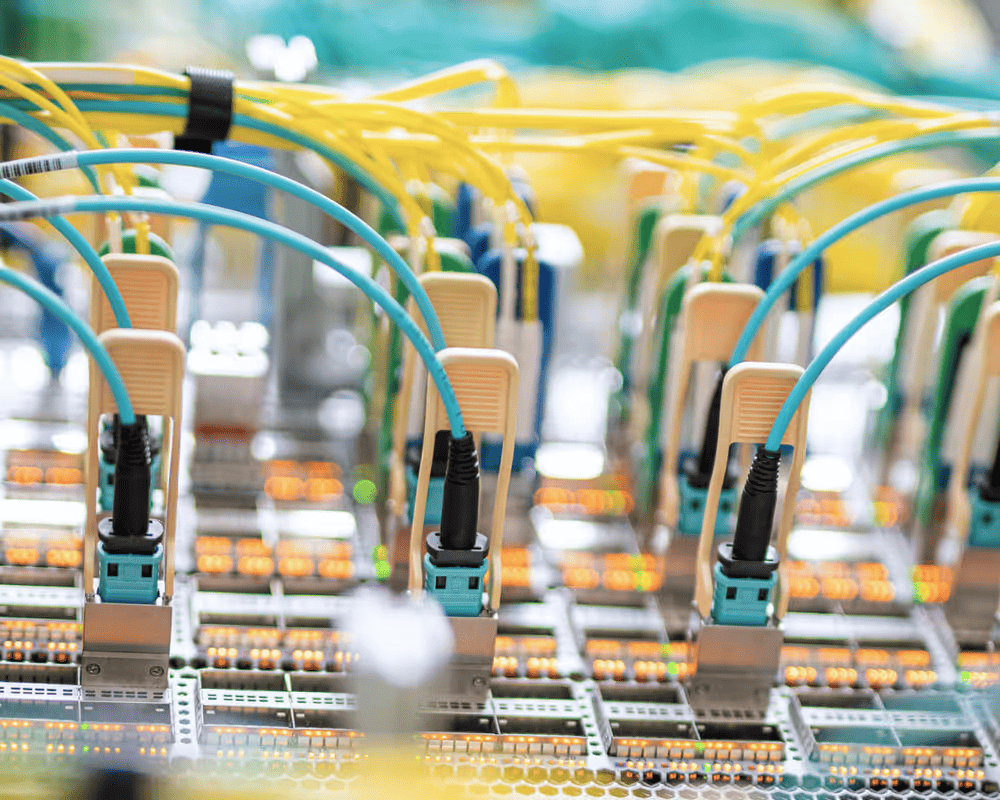

Our Cloud KVM GPU platform uses KVM (Kernel-based Virtual Machine) virtualization to deliver dedicated GPU resources with minimal overhead.

Isolated GPU Resources

Each instance gets dedicated GPU time-slices and memory allocation for consistent performance.
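To make "time-slices" concrete: with time-slicing, instances sharing a GPU take turns running for at most a fixed slice. The sketch below is a generic round-robin scheduler, assuming a fixed slice length and jobs measured in milliseconds of GPU work; it is illustrative only and is not how this platform's scheduler is implemented.

```python
from collections import deque
from typing import Dict, List, Tuple


def round_robin(work_ms: Dict[str, int], slice_ms: int) -> List[Tuple[str, int]]:
    """Simulate GPU time-slicing: instances take turns for at most slice_ms each.

    work_ms maps an instance name to the milliseconds of GPU work it needs.
    Returns the schedule as (instance, milliseconds run) in execution order.
    """
    if slice_ms <= 0:
        raise ValueError("slice_ms must be positive")
    queue = deque((name, ms) for name, ms in work_ms.items() if ms > 0)
    schedule = []
    while queue:
        name, remaining = queue.popleft()
        ran = min(slice_ms, remaining)
        schedule.append((name, ran))
        if remaining > ran:
            # Unfinished work goes to the back of the queue for its next slice
            queue.append((name, remaining - ran))
    return schedule


if __name__ == "__main__":
    print(round_robin({"vm-a": 25, "vm-b": 10}, slice_ms=10))
    # [('vm-a', 10), ('vm-b', 10), ('vm-a', 10), ('vm-a', 5)]
```

Every active instance gets one turn per cycle no matter how much work the others have queued, which is what makes time-sliced performance predictable; the cost is switching between contexts at each slice boundary.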

Minimal Overhead

Advanced KVM virtualization delivers near-bare-metal performance for your GPU-intensive workloads.

On-Demand Resources

Scale your GPU resources up or down based on your computational needs and budget.

Multi-GPU Instances

Combine multiple GPUs in a single instance for massive parallel processing power.
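Multi-GPU instances are commonly used for synchronous data parallelism: each GPU computes gradients on its own shard of the global batch, and the per-GPU gradients are averaged (an all-reduce) before the shared weights are updated. The stdlib sketch below shows that arithmetic for a one-parameter linear model `y_hat = w * x` with mean squared error; the function names are hypothetical and each "GPU" is just a loop iteration. In TensorFlow, `tf.distribute.MirroredStrategy` applies this pattern across the GPUs of a single machine.

```python
from typing import List, Sequence, Tuple

Sample = Tuple[float, float]  # (x, y)


def shard(batch: Sequence[Sample], num_gpus: int) -> List[Sequence[Sample]]:
    """Split a global batch into num_gpus equal, contiguous shards (one per GPU)."""
    if not batch or num_gpus <= 0 or len(batch) % num_gpus:
        raise ValueError("batch size must be a positive multiple of num_gpus")
    size = len(batch) // num_gpus
    return [batch[i * size:(i + 1) * size] for i in range(num_gpus)]


def mse_grad(w: float, samples: Sequence[Sample]) -> float:
    """Derivative with respect to w of mean((w * x - y) ** 2) over the samples."""
    return sum(2 * (w * x - y) * x for x, y in samples) / len(samples)


def data_parallel_grad(w: float, batch: Sequence[Sample], num_gpus: int) -> float:
    """Each 'GPU' computes a gradient on its shard; averaging them mimics an all-reduce mean."""
    per_gpu = [mse_grad(w, s) for s in shard(batch, num_gpus)]
    return sum(per_gpu) / num_gpus


if __name__ == "__main__":
    batch = [(1.0, 2.0), (2.0, 4.1), (3.0, 5.9), (4.0, 8.2)]
    # With equal-sized shards, the averaged per-GPU gradient equals the full-batch gradient
    print(data_parallel_grad(0.5, batch, 2), mse_grad(0.5, batch))
```

Because the shards are equal in size, the mean of the per-shard means equals the full-batch mean, so adding GPUs changes how the work is divided, not the gradient being computed.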

Key Features

Host your hardware in our enterprise-grade data centers with 24/7 support, redundant power, and advanced security.

- Dedicated GPU Resources: Isolated GPU cores for consistent performance
- High-Speed Networking: 10 Gbps+ connectivity for data-intensive workflows
- Flexible Scaling: Scale up or down based on your computational needs
- Secure Environment: Encrypted data storage and isolation between instances
- 24/7 Monitoring: Proactive monitoring and maintenance
- Customizable Configurations: Tailor GPU, CPU, RAM, and storage to your requirements
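As a rough guide to what 10 Gbps means for data-intensive workflows: moving S gigabytes takes S × 8 / 10 seconds at full line rate, so a 100 GB dataset needs about 80 seconds. The helper below does that arithmetic; the `efficiency` factor is an assumption standing in for protocol overhead and contention, and decimal units (1 GB = 10^9 bytes, 1 Gbps = 10^9 bit/s) are assumed.

```python
def transfer_seconds(size_gb: float, link_gbps: float = 10.0, efficiency: float = 1.0) -> float:
    """Seconds to move size_gb gigabytes over a link_gbps link.

    efficiency is the assumed fraction of line rate actually achieved (0 < efficiency <= 1).
    """
    if link_gbps <= 0:
        raise ValueError("link_gbps must be positive")
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    gigabits = size_gb * 8  # gigabytes -> gigabits
    return gigabits / (link_gbps * efficiency)


if __name__ == "__main__":
    print(transfer_seconds(100))                  # 80.0 at full line rate
    print(transfer_seconds(100, efficiency=0.8))  # 100.0 if only 80% of line rate is achieved
```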

Optimized for Every Workload

import tensorflow as tf

# Configure TensorFlow to use the Cloud KVM GPU resources visible to the instance
gpus = tf.config.list_physical_devices('GPU')

if gpus:
    try:
        # Enable memory growth so TensorFlow allocates GPU memory as needed
        # instead of reserving it all up front; this must happen before the
        # GPUs are initialized, or TensorFlow raises a RuntimeError
        for gpu in gpus:
            tf.config.experimental.set_memory_growth(gpu, True)
    except RuntimeError as e:
        print(e)

# Build a small convolutional image classifier; TensorFlow places its ops
# on the GPU automatically when one is available
model = tf.keras.Sequential([
    tf.keras.Input(shape=(224, 224, 3)),
    tf.keras.layers.Conv2D(32, (3, 3), activation='relu'),
    tf.keras.layers.MaxPooling2D((2, 2)),
    tf.keras.layers.Conv2D(64, (3, 3), activation='relu'),
    tf.keras.layers.MaxPooling2D((2, 2)),
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dense(128, activation='relu'),
    tf.keras.layers.Dense(10, activation='softmax'),
])

# Compile the model; these are standard settings, not GPU-specific ones
model.compile(optimizer='adam',
              loss='sparse_categorical_crossentropy',
              metrics=['accuracy'])

Data that drives change, shaping the future

Decentralized, secure, and built to transform industries worldwide. See how our platform enables sustainable growth and innovation at scale.

Our platform not only drives innovation but also empowers businesses to make smarter, data-backed decisions in real time. By harnessing the power of AI and machine learning, we provide actionable insights that help companies stay ahead of the curve.

Frequently Asked Questions

If you can't find what you're looking for, email our support team.

What GPU models are available in your Cloud KVM GPU offering?

We offer a wide range of NVIDIA and AMD GPU models, including the NVIDIA A100, H100, and RTX 4090 and the AMD MI250. Contact our sales team for the full list of GPUs available on our Cloud KVM GPU platform.

How is performance isolation ensured between Cloud KVM GPU instances?

Our KVM-based virtualization provides strong performance isolation through dedicated GPU time-slicing and memory allocation. Each Cloud KVM GPU instance gets guaranteed GPU resources.

Can I customize the CPU, RAM, and storage along with the GPU?

Absolutely. We offer fully customizable Cloud KVM GPU configurations to match your specific workload requirements. Choose from various CPU cores, RAM sizes, and storage options.

What is the typical setup time for a Cloud KVM GPU instance?

Most Cloud KVM GPU instances are provisioned within minutes. For custom configurations or special requirements, provisioning may take up to a few hours.

Do you offer any management tools for monitoring Cloud KVM GPU utilization?

Yes, our dashboard provides real-time monitoring of Cloud KVM GPU utilization, temperature, memory usage, and other key metrics. We also support integration with popular monitoring tools.
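For scripted checks inside an instance, alongside the dashboard, the same kinds of metrics can usually be read with NVIDIA's `nvidia-smi` tool. The sketch assumes an NVIDIA GPU with its driver and `nvidia-smi` installed in the guest (AMD instances would need a different tool); the function names are hypothetical, and fields that a virtualized GPU reports as `[N/A]` or `[Not Supported]` are returned as `None`.

```python
import subprocess
from typing import Dict, List, Optional

# Metrics requested from nvidia-smi, in output column order
FIELDS = ["utilization.gpu", "temperature.gpu", "memory.used", "memory.total"]


def _to_number(value: str) -> Optional[float]:
    """Convert one CSV cell to float; placeholders such as '[N/A]' become None."""
    try:
        return float(value)
    except ValueError:
        return None


def parse_gpu_csv(text: str) -> List[Dict[str, Optional[float]]]:
    """Parse `--format=csv,noheader,nounits` output, which has one line per GPU."""
    rows = []
    for line in text.strip().splitlines():
        cells = [cell.strip() for cell in line.split(",")]
        rows.append({name: _to_number(cell) for name, cell in zip(FIELDS, cells)})
    return rows


def query_gpus() -> List[Dict[str, Optional[float]]]:
    """Run nvidia-smi once: utilization (%), temperature (C), memory used/total (MiB) per GPU."""
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=" + ",".join(FIELDS), "--format=csv,noheader,nounits"],
        capture_output=True,
        text=True,
        check=True,
    )
    return parse_gpu_csv(result.stdout)
```

Calling `query_gpus()` returns one dictionary per visible GPU; running it on a schedule and forwarding the values is one way to feed an external monitoring tool. Parsing is kept separate from the subprocess call so it can be tested without a GPU.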